True Zero-shot MT

Teaching Machines a New Language Like Humans

A little over a week ago, Gemini 1.5 reported close to human-level performance on MTOB, a recent, challenging translation dataset. In this newsletter, we’ll dig into this result, explore true zero-shot machine translation (MT), and consider how to teach LLMs a new language like humans.

Low-resource MT

To set the scene, let’s first consider what it means for a language to be considered “low-resource”. As with LLMs, the performance of MT models depends on the amount of training data—both parallel and monolingual—available in a given language. As a result, there is a gulf between languages with lots of data and languages with little data in common pre-training corpora. The latter are typically referred to as “low-resource”.1

To bridge the gap between resource-rich and resource-poor languages and make machine translation more accessible, new translation benchmarks have been created that cater specifically to low-resource languages. The Conference on Machine Translation (WMT) now regularly hosts shared tasks on low-resource MT such as for Indic and African languages; workshops such as AmericasNLP support indigenous languages; and large-scale decentralized collaborations such as Masakhane, SEACrowd, and Aya created MT datasets for African languages, Indonesian languages, and 100+ languages, respectively. More recently, FLORES-200 expanded translation data coverage to 200 languages. Beyond these efforts, through extensive work on data cleaning, filtering, and language identification, researchers have been able to obtain data and train MT models for 1000+ languages (Bapna et al., 2022; NLLB Team, 2022).

LLMs are typically trained on parallel data (Kale et al., 2021) and are increasingly used for translation (Vilar et al., 2023). However, in light of ever-larger pre-training datasets, the opacity of pre-training data, and challenges of language identification on the web (Caswell et al., 2020), it is unclear how much data LLMs have seen in a low-resource language during pre-training. Chances are that most LLMs have seen some data in most languages that are available on the web.

From an experimental perspective, it is thus not straightforward to assess how much data LLMs actually need to learn to translate in a new language. While we could restrict the languages a model is trained on and examine its performance on a held-out language with increasing amounts of training data, we know that most LLM capabilities only emerge at scale. So how can we study the translation abilities of fully pre-trained LLMs in a controlled setting?

True Zero-shot MT

To study this setting, we have to look at translating a language that was truly unseen during pre-training.2 For simplicity, I use the term true zero-shot MT to designate the setting of translating into a language with no pre-training data, using only data provided via in-context learning.3

While we can find data for 1,500+ languages on the web (Bapna et al., 2022), there are more than 7,000 languages spoken around the world. The remaining ~5,500 languages with little or no presence online are thus potential candidates for our target language.4

As expected, very little data in these languages has been used in standard LLM pipelines. Interestingly, the resources that are available for these languages are similar to those humans might use to learn a second language (L2), including:5

a list of words and their translations to learn a language’s vocabulary;

paired sentences to learn about word usage, word order, and some morphology;

a grammar book to study the structure of the language.

Let’s take a look at how these resources can be used by LLMs:

1. A Bilingual Word List

While lists of words and their translations only teach us a limited amount about a new language, they are available for a huge number of languages thanks to projects such as PanLex. However, for many languages, they only contain translation pairs for a small number of terms such as the numbers from 1–10.

Bilingual lexicons have been a core resource for aligning word embeddings across languages (Ruder et al., 2019). They have also been used to bootstrap unsupervised MT systems (Artetxe et al., 2018), though translating text word by word does not get you far, even if you account for word order differences (Lample et al., 2018).
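To make the limitation concrete, here is a minimal sketch of word-by-word translation. It assumes a toy English→French lexicon (French only stands in for a target language; the lexicon and function name are illustrative and not taken from any cited system). Each token is looked up independently, so the adjective stays in front of the noun even though French places it after: the correct translation would be "la voiture rouge".

```python
# Toy English->French lexicon, illustrative only (ignores gender, number, etc.).
TOY_LEXICON = {"the": "la", "red": "rouge", "car": "voiture"}


def word_by_word(sentence: str, lexicon: dict[str, str]) -> str:
    """Translate each token independently; tokens missing from the lexicon are copied as-is."""
    return " ".join(lexicon.get(tok, tok) for tok in sentence.lower().split())


print(word_by_word("The red car", TOY_LEXICON))  # → la rouge voiture
```

Reordering rules can fix simple cases like this one, but morphology, idioms, and words missing from the lexicon remain out of reach, which is why lexicons work better as one source of supervision among several.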

On the other hand, even in the best LLMs, cross-lingual alignment of words in the vocabulary is still not perfect. Bilingual lexicons are thus a potentially viable, large-coverage form of supervision. There are multiple ways in which they can be used with LLMs:

data augmentation: “noisy” code-mixed samples are created by replacing words in source language sentences with their target language translations (Wang et al., 2022, Reid & Artetxe, 2022);

lexical prompting: prepending <source word, translation> pairs to the prompt for source words that occur in the input (Ghazvininejad et al., 2023);

parallel data: <source word, translation> pairs are treated as “sentence” pairs and added to the existing parallel data.
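The three strategies above can be sketched in a few lines of Python. This is a minimal illustration, assuming a tiny hypothetical lexicon (again with French standing in for the target language) and whitespace tokenization; the function names and the prompt format are made up for illustration and do not reproduce the exact setups of the cited papers.

```python
import random
from typing import Optional

# Hypothetical toy lexicon; real work would draw on resources such as PanLex or GATITOS.
LEXICON = {"water": "eau", "drink": "boire", "cold": "froide"}


def code_mix(sentence: str, lexicon: dict[str, str], p: float = 0.5,
             rng: Optional[random.Random] = None) -> str:
    """Data augmentation: swap each source word found in the lexicon for its
    translation with probability p, producing a noisy code-mixed sentence."""
    if rng is None:
        rng = random.Random(0)  # fixed seed for reproducibility
    out = []
    for tok in sentence.split():
        key = tok.lower()
        out.append(lexicon[key] if key in lexicon and rng.random() < p else tok)
    return " ".join(out)


def lexical_prompt(sentence: str, lexicon: dict[str, str]) -> str:
    """Lexical prompting: prepend <source word, translation> pairs for words in the input."""
    words = dict.fromkeys(sentence.lower().split())  # de-duplicate, keep order
    hints = [f"<{w}, {lexicon[w]}>" for w in words if w in lexicon]
    return "\n".join(hints) + f"\nTranslate into French: {sentence}\n"


def lexicon_as_parallel(lexicon: dict[str, str]) -> list[tuple[str, str]]:
    """Parallel data: treat every lexicon entry as a one-word 'sentence' pair."""
    return list(lexicon.items())


print(code_mix("I drink cold water", LEXICON, p=1.0))  # → I boire froide eau
```

The augmented sentences are deliberately ungrammatical in both languages; the hope is that they expose the model to target-language words in familiar contexts.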

Jones et al. (2023) compare several of these augmentations. They find that the augmentations provide similar gains for unsupervised machine translation and that high-quality bilingual lexicons such as GATITOS are crucial. Recently, Koto et al. (2024) used PanLex to extend sentiment lexicons to more languages.

2. Few Parallel Sentences

Parallel data is the bread and butter of MT research. MT models and LLMs are trained on millions of parallel sentences. Prior work on low-resource MT, for example for Nepali–English, used hundreds of thousands of parallel sentences (Guzmán et al., 2019).

More recent work studied the impact of around 1–6k professionally translated sentences across different low-resource languages (Maillard et al., 2023). They find that even a few thousand high-quality parallel sentences are helpful, while multilingual training and back-translation are crucial to achieve good performance.
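Back-translation, mentioned above, can be sketched as follows. This assumes a hypothetical target→source model `tgt_to_src` (any callable mapping a target-language sentence to a source-language one); the function names are illustrative. Genuine target-language text is paired with its machine-translated source side, so the source→target model is trained to produce real target-language output.

```python
from typing import Callable


def back_translate(monolingual_target: list[str],
                   tgt_to_src: Callable[[str], str]) -> list[tuple[str, str]]:
    """Create synthetic (source, target) pairs: the source side is machine-translated,
    while the target side is authentic monolingual text."""
    return [(tgt_to_src(t), t) for t in monolingual_target]


def augment(parallel: list[tuple[str, str]], monolingual_target: list[str],
            tgt_to_src: Callable[[str], str]) -> list[tuple[str, str]]:
    """Combine the (small) genuine parallel set with back-translated pairs."""
    return list(parallel) + back_translate(monolingual_target, tgt_to_src)


# The lambda is a dummy placeholder for a real reverse MT model.
print(back_translate(["target sentence"], lambda s: f"<bt> {s}"))
# → [('<bt> target sentence', 'target sentence')]
```

In practice the reverse model would itself be trained on the available parallel data, which is one reason a few thousand high-quality sentences can go a long way.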

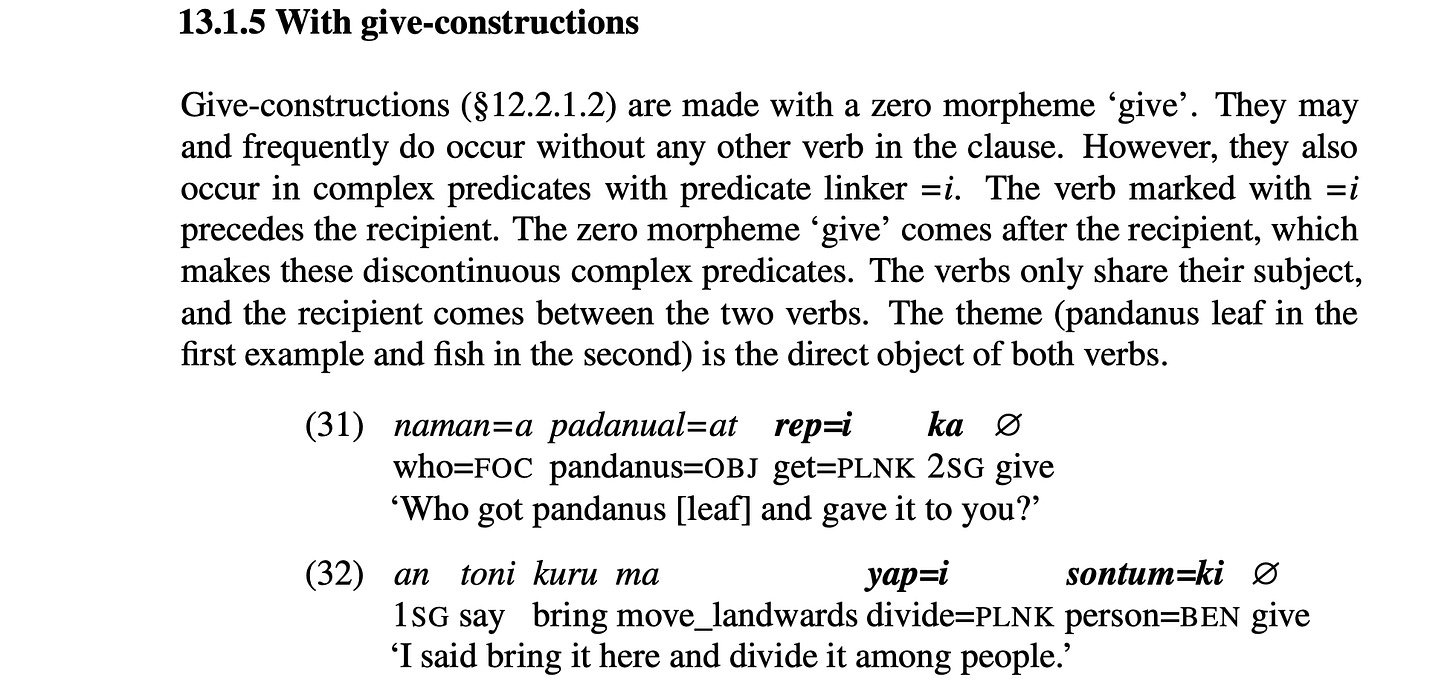

But what can you do if you only have very few parallel sentences for a language? Such scenarios are common in the form of Rosetta Stone puzzles in Linguistic Olympiads.

These puzzles are designed so that only a minimal number of parallel sentences is needed to deduce the relevant translations to and from the target language (Chickasaw above). Sahin et al. (2020) collected 100 such puzzles. LLMs such as GPT-3.5 Turbo still fall short of solving these puzzles, reaching an Exact Match score of only around 20%, and even advanced prompting methods do not help (Lin et al., 2023).
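Exact Match counts a prediction as correct only if it is identical to the reference. Here is a minimal sketch; the normalization (Unicode NFC, lowercasing, collapsing whitespace, dropping sentence-final punctuation) is an assumption for illustration and may differ from what Sahin et al. (2020) or Lin et al. (2023) actually use. NFC matters for puzzle languages whose orthographies use diacritics, since the same character can be encoded in more than one way.

```python
import unicodedata


def normalize(text: str) -> str:
    """Assumed normalization: NFC, lowercase, collapse whitespace, drop final punctuation."""
    text = unicodedata.normalize("NFC", text).lower()
    return " ".join(text.split()).rstrip(".!?").rstrip()


def exact_match(predictions: list[str], references: list[str]) -> float:
    """Percentage of predictions identical to their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return 100.0 * hits / len(references)
```

Because a single wrong morpheme makes the whole answer incorrect, Exact Match is a strict metric, which partly explains the low scores on these puzzles.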

3. A Grammar Book

While both bilingual lexicons and parallel sentences are well-known data sources for training MT models, grammar books are much less common. A reference grammar is a key product of linguistic fieldwork, which can also result in other artifacts such as language learning materials including alphabet books, learners’ guides, etc.

Many reference grammars are available as books in PDF format. As part of sourcing open-source OCR data from Google Books for XTREME-UP (Ruder et al., 2023), we came across many public-domain books in under-represented languages that described the grammar or vocabulary of the language and were created as part of missionary efforts and linguistic fieldwork.6

Compared to these, A grammar of Kalamang (Visser, 2022) used in MTOB is a more recent reference grammar. Reference grammars are publicly available in hundreds of languages and present a promising and, with the exception of MTOB, untapped resource for studying the language acquisition of LLMs.

4. Putting Everything Together: MTOB

MTOB (Machine Translation from One Book; Tanzer et al., 2023) is a recent dataset that provides the three above resources for Kalamang, an endangered language spoken by fewer than 200 people7, which is essentially absent from pre-training corpora.

In addition, it is not closely related to other languages with many speakers (which is important to measure true zero-shot performance) and uses the Latin script, which makes it easy to process with LLMs. The authors obtained the permission of the Kalamang-speaking community for using their data, which is crucial for this type of work.

Compared to standard MT evaluation where native speaker translations are the benchmark to beat, producing native-level translations may not be feasible using only the provided resources. The authors thus provide another human baseline: The first author Garrett Tanzer learned how to translate Kalamang from scratch by reading the grammar book for 10+ hours and then used the parallel data and the Internet as reference when performing the translation task over the course of several weeks. That is dedication to human evaluation!

In their evaluation, all LLMs underperform the human baseline, with Claude 2 performing best among them. More recently, Gemini 1.5 Pro used its extremely long context window to learn from the Kalamang resources in context, improving substantially on the English→Kalamang translation task. Let’s look at what these results mean for the field overall and for under-represented languages in particular.

Future Impact

Long Context Modeling

It’s quite remarkable how long-context models have progressed over the last few years. Being able to translate into a new language using only in-context learning based on existing linguistic resources is an impressive feat. Nevertheless, parameter-efficient fine-tuning and retrieval-augmented generation (RAG) are important baselines for this setting that can put the in-context learning performance in perspective.
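To illustrate the difference between the two settings, here is a minimal sketch that builds either a full-context prompt (grammar book, word list, and all parallel sentences) or a RAG-style prompt that keeps only the parallel sentences with the highest word overlap with the input, plus the relevant word-list entries. Word overlap is a deliberately crude stand-in for a real retriever, and the prompt template, class, and function names are hypothetical, not the format used by MTOB or Gemini 1.5. The target-language strings in the usage example are placeholders, not real Kalamang.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    grammar: str                       # full text of the grammar book
    word_list: dict[str, str]          # English word -> target-language word
    parallel: list[tuple[str, str]]    # (English, target-language) sentence pairs


def _format_pairs(pairs: list[tuple[str, str]]) -> str:
    return "\n".join(f"{en} => {tgt}" for en, tgt in pairs)


def full_context_prompt(res: Resources, sentence: str) -> str:
    """Long-context in-context learning: put every resource into the prompt."""
    words = "\n".join(f"{en} = {tgt}" for en, tgt in res.word_list.items())
    return (f"Grammar:\n{res.grammar}\n\nWord list:\n{words}\n\n"
            f"Examples:\n{_format_pairs(res.parallel)}\n\n"
            f"Translate: {sentence}\nTranslation:")


def _overlap(a: str, b: str) -> int:
    """Number of shared lowercase word types (crude similarity score)."""
    return len(set(a.lower().split()) & set(b.lower().split()))


def retrieval_prompt(res: Resources, sentence: str, k: int = 2) -> str:
    """RAG-style baseline: only the k most similar pairs and matching word-list entries."""
    # sorted() is stable, so ties keep their original corpus order.
    top_k = sorted(res.parallel, key=lambda pair: _overlap(pair[0], sentence),
                   reverse=True)[:k]
    tokens = dict.fromkeys(sentence.lower().split())
    words = "\n".join(f"{w} = {res.word_list[w]}" for w in tokens if w in res.word_list)
    return (f"Word list:\n{words}\n\nExamples:\n{_format_pairs(top_k)}\n\n"
            f"Translate: {sentence}\nTranslation:")


res = Resources(
    grammar="(grammar book text)",
    word_list={"dog": "<tgt:dog>", "runs": "<tgt:run>"},
    parallel=[("the cat sleeps", "<tgt sentence 1>"),
              ("the dog runs fast", "<tgt sentence 2>")],
)
print(retrieval_prompt(res, "dog runs", k=1))
```

The full-context prompt grows with the size of the resources, while the retrieval prompt stays small but can only be as good as the retriever, which is why comparing the two is informative.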

Long Context Datasets

While many challenging long-context benchmarks are synthetic in nature, including the most challenging tasks in Long Range Arena (Tay et al., 2021) and the needle-in-the-haystack tasks in the Gemini 1.5 evaluation, datasets like MTOB provide a more realistic setting using standard linguistic resources. In addition, they enable comparison to a human baseline and to a (potentially unattainable) human native speaker. Given the increasing popularity of long-context models, I hope we see more long-context datasets grounded in realistic data and human-level comparisons.

Under-represented Languages

While these results highlight the potential of LLMs to learn to translate with very little data, many under-represented languages are primarily spoken; they do not have a written tradition or a standardized orthography. Text-based NLP technology is thus of limited use to them. For these languages, multi-modal LLMs will be an important foundation. Given the powerful capabilities of LLMs, we should design evaluations with the needs of the language communities in mind. A promising area is to support ‘contact languages’ including creoles and regional varieties of standardized languages (Bird, 2022).

NLP and Cognitive Science

In order to study language acquisition in language models, benchmarks such as the BabyLM Challenge employ a developmentally plausible corpus including mostly transcribed speech and a limited number of tokens. However, the embodied, interactive, and multi-modal nature of first language (L1) acquisition is challenging to replicate with current models. L2 acquisition in the form of true zero-shot MT may be a more accessible testbed to study how a model learns a new language based on limited linguistic resources.

Interpretability and Model Understanding

Grammar books and language learning resources similarly provide a means to analyze how LLMs acquire new information and how they use their existing knowledge to reason over new inputs. They can be used to better understand models’ inner workings via tasks such as grammar induction (Kim et al., 2020). For instance, an interesting question is whether the sentences a model retrieves via RAG are similar to those consulted by a human when translating into a new language.
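One simple way to quantify that question is to compare the set of sentences retrieved for the model with the set a human consulted for the same input. The sketch below computes Jaccard similarity and the fraction of human-consulted sentences that were also retrieved; the sentence IDs are hypothetical, and these metrics are just one reasonable choice, not an established protocol.

```python
def jaccard(model_ids, human_ids) -> float:
    """|intersection| / |union| of the two sets of sentence IDs."""
    a, b = set(model_ids), set(human_ids)
    if not a and not b:
        return 1.0  # two empty sets are treated as identical
    return len(a & b) / len(a | b)


def recall_of_human(model_ids, human_ids) -> float:
    """Fraction of the sentences a human consulted that the retriever also returned."""
    human = set(human_ids)
    if not human:
        raise ValueError("human_ids must not be empty")
    return len(set(model_ids) & human) / len(human)


# Hypothetical IDs of parallel sentences in the resource.
MODEL = [3, 7, 12]
HUMAN = [7, 12, 20, 21]
print(jaccard(MODEL, HUMAN), recall_of_human(MODEL, HUMAN))  # → 0.4 0.5
```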

NLP and Linguistics

Making the most of such resources, both in obtaining and understanding the data and in interpreting model results, requires collaborating with linguists. MTOB provides an example of what such a collaboration can look like in practice, with the linguist actively participating in the research and co-authoring the paper. Such interdisciplinary collaborations, while challenging and complex, are often a breath of fresh air, so I hope to see more of them in the future.

1. Note that the term “low-resource” is typically a misnomer, as many low-resource languages do have data available; it may just not be easily accessible because it is in different formats, in another modality, etc. I’ll still use it here, as we are strictly talking about the data available for these languages in pre-training.

2. We have studied transfer to languages with unseen scripts in prior work (Pfeiffer et al., 2021), though many of these scripts have likely been seen by more recent LLMs.

3. I use the term true zero-shot MT to contrast it with zero-shot MT (Johnson et al., 2016), which refers to the setting where a language has monolingual but no parallel data in pre-training. The term also relates to the usage of the term true few-shot learning (Perez et al., 2021), which does not use any other held-out examples.

4. There are many factors that affect the choice of the target language for true zero-shot MT: many languages don’t have a standardized orthography; others use a different script, which may complicate processing; connecting with native speakers and finding data may be difficult for many languages; language similarity plays an important role in learning a new language; finally, some communities do not approve of the use of MT for their language.

5. Note that this is a text-centric perspective of zero-shot MT. In practice, for such local languages we can also expect the existence of raw speech with translations (Bird, 2022).

6. We excluded these books from our data as they are mainly in English with examples in the target language, rather than the monolingual target-language data that we wanted to collect.

7. Kalamang is spoken in the western part of the island of New Guinea, which belongs to Indonesia, while the eastern part of the island is part of Papua New Guinea. Incredibly, Papua New Guinea and Indonesia are also the two countries with the most languages in the world. See Koto et al. (2022) for an overview of NLP challenges for Indonesian languages and the AACL 2023 tutorial for the current status of NLP in Southeast Asia.