The Evolving Landscape of LLM Evaluation

Navigating Evaluation Pitfalls

Edit, May 16: Added mention of Benchmarking Benchmark Leakage in Large Language Models (Xu et al., 2024).

In recent years, LLM capabilities have outpaced evaluation benchmarks. This is not a new development [1]. The set of canonical LLM evals has further narrowed to a small set of benchmarks such as MMLU for general natural language understanding, GSM8k for mathematical reasoning, and HumanEval for code, among others. Recently, concerns regarding the reliability of even this small set of benchmarks have emerged.

"Datasets are the telescopes of our field."—Aravind Joshi

Without reliable benchmarks, we are effectively flying blind. With many public benchmarks no longer seen as hallmarks of objectivity, ML researchers and practitioners increasingly rely on their intuition to assess a model’s ‘vibe’, i.e., how interactions with it feel.

Let’s explore how we got here and what the path forward may look like.

Looking Back on Benchmarking

Benchmarks have been integral to the development of ML and NLP (see my previous post for a brief history of ML benchmarking). Breakthrough architectures such as ResNets or Transformers first captured people’s attention through their impressive ImageNet and WMT results, respectively. Benchmarks such as MNIST, CIFAR-100, and ImageNet have been around for more than a decade and are still in use today.

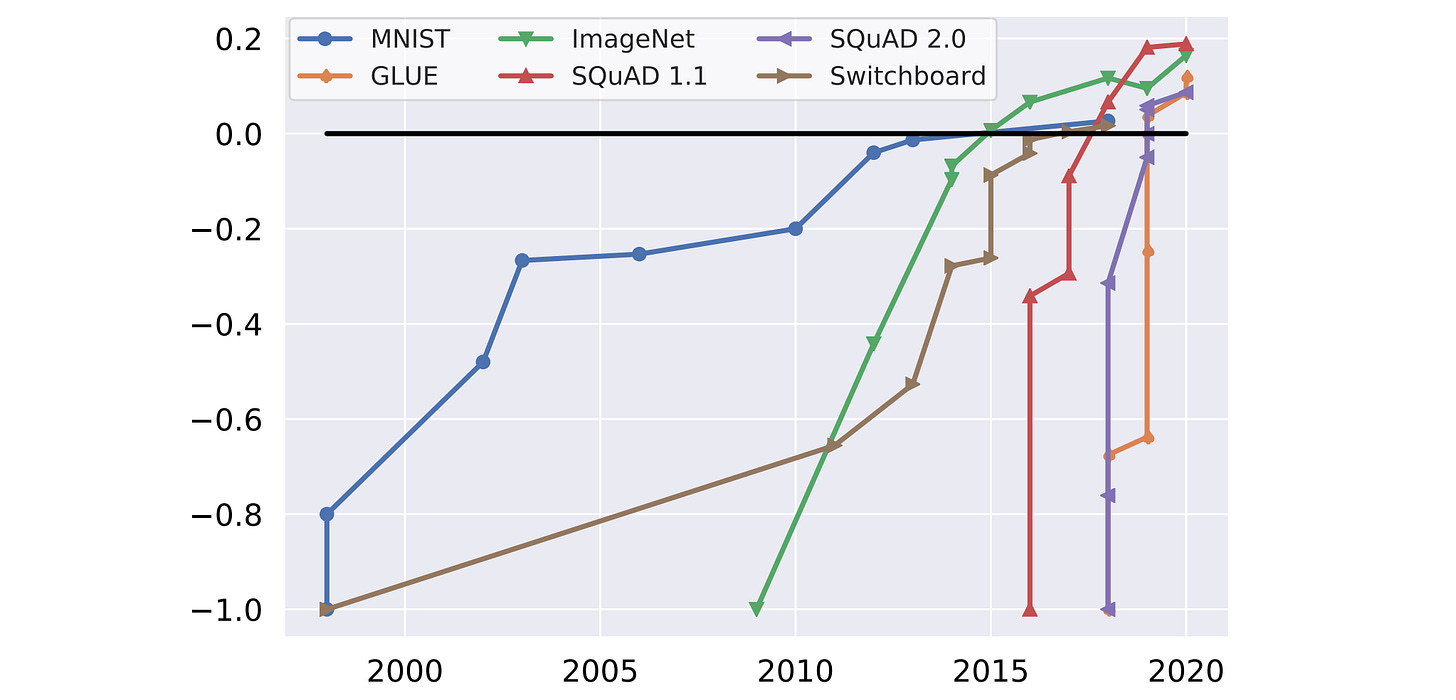

In recent years, in light of rapidly improving model capabilities, the time between a benchmark’s creation and its saturation (when model performance exceeds human performance) has dramatically shrunk.

Beyond this faster saturation, two further problems are contributing to the current benchmark crisis: memorization and overfitting.

Memorization

Most popular benchmarks are either directly available on the web or may have been uploaded in different forms on GitHub or other platforms. Current models are trained on much of the Internet, with newer models trained on more recent snapshots of CommonCrawl. Unless filtering measures are taken to specifically remove benchmark data from pre-training, models are invariably exposed to test data through their pre-training. While they may not memorize every example, training on the data makes it more likely for the model to produce the correct prediction.

As a result, LLMs including Aquila2 and Qwen models reproduce training and even test examples from MATH and GSM8k verbatim. GPT models perform much better on coding problems released before their pre-training data cut-off. More generally, LLMs perform much better on datasets released before the pre-training data cut-off, and barely improve over a majority baseline (!) on uncontaminated classification tasks in zero- and few-shot settings [2].
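To make the cut-off comparison concrete, here is a minimal sketch of how one might split evaluation results by release date. The function name `accuracy_by_cutoff` and the `(release_date, is_correct)` record format are my own assumptions for illustration, not the setup used in the studies above.

```python
from datetime import date


def accuracy_by_cutoff(results, cutoff):
    """Split (release_date, is_correct) records at the cut-off date.

    Returns (accuracy_before, accuracy_after); a side with no records is None.
    """
    before = [ok for released, ok in results if released < cutoff]
    after = [ok for released, ok in results if released >= cutoff]

    def mean(flags):
        return sum(flags) / len(flags) if flags else None

    return mean(before), mean(after)


# Hypothetical per-example results, made up for illustration only.
results = [
    (date(2021, 1, 10), True),
    (date(2021, 3, 5), True),
    (date(2021, 6, 20), False),
    (date(2021, 8, 1), True),
    (date(2021, 10, 2), False),
    (date(2022, 1, 15), True),
    (date(2022, 4, 9), False),
    (date(2022, 7, 30), False),
]
acc_before, acc_after = accuracy_by_cutoff(results, cutoff=date(2021, 9, 1))
```

A large gap between the two numbers does not prove contamination (newer problems may simply be harder), but it is a cheap signal that a benchmark deserves a closer look.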

What can we do to mitigate memorization? Ravaut et al. (2024) highlight some best practices to reduce contamination in their survey:

Encrypting evaluation datasets. The authors of the MTOB dataset (discussed in a previous newsletter) did this. The downside: if the dataset is shared unencrypted at any point, it is hard to contain it again.

Scanning newly released evaluation datasets. We can make sure to only evaluate on uncontaminated data.

Preventing data leakage to closed-source APIs. Evaluating on closed-source APIs inadvertently leaks data to them, whether or not the data is made available online.
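As a rough illustration of what scanning an evaluation set can look like, the sketch below flags test examples that share a long word n-gram with a reference corpus. This is a simplified heuristic under my own assumptions (8-word n-grams, exact matching after lowercasing, hypothetical function names), not the method of any particular paper; it will miss paraphrased or translated copies.

```python
import re


def ngrams(text, n=8):
    """Return the set of word n-grams in lowercased, punctuation-stripped text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def contamination_rate(test_examples, corpus_docs, n=8):
    """Return (fraction flagged, flagged examples).

    An example is flagged if it shares at least one n-gram with the corpus.
    """
    corpus_ngrams = set()
    for doc in corpus_docs:
        corpus_ngrams |= ngrams(doc, n)
    flagged = [ex for ex in test_examples if ngrams(ex, n) & corpus_ngrams]
    rate = len(flagged) / len(test_examples) if test_examples else 0.0
    return rate, flagged


# Made-up documents for illustration; a real scan would stream a crawl snapshot.
corpus_docs = [
    "Some forum post: a baker makes 30 loaves each morning and sells "
    "two thirds of them before noon."
]
test_examples = [
    "A baker makes 30 loaves each morning and sells two thirds of them. "
    "How many are left?",
    "A train travels 120 kilometres in two hours. What is its average speed?",
]
rate, flagged = contamination_rate(test_examples, corpus_docs)
```

Holding every corpus n-gram in memory only works for small corpora; at web scale one would need hashing or an index, which is beyond this sketch.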

Overfitting

With increased attention afforded to LLMs and billions of dollars in funding at play, the pressure to do well on public benchmarks has increased. A couple of percentage points on an established benchmark such as MMLU can make or break an investor presentation or convince potential customers to try a model.

As a result, there is the risk of overfitting to public benchmarks if models are optimized to do well on them. One way of overfitting is through the creation of synthetic data, which may inadvertently reflect use cases in the test data rather than a broader set of model applications. For example, a recent study found that several model families such as Phi and Mistral models show evidence of systematic overfitting on the GSM8k grade school math dataset.

For benchmarks such as MT-Bench that employ a specific model (often GPT4) as evaluator, there is the additional risk of overfitting to biases of the evaluator. If we optimize our model to do well on MT-Bench, we may end up training on GPT4-created data. This can lead to higher scores on GPT4-rated evals but worse performance when tested by humans as models mimic GPT4’s style but not other aspects such as its factuality.

Training on such model-created synthetic data may thus lead to gains and improve evals in the short term; in the long term, it can lead to unanticipated biases and blind spots in the user experience.

Taking into account the possibility of both memorization and overfitting, the results on popular static evaluation benchmarks should be taken with a grain of salt.

To Vibe or Not to Vibe?

Instead of blindly trusting public benchmarks or result tables in press releases, it is thus more important than ever to run your own tests. LLMs are versatile and different people have different preferences and use cases. Utility is in the eye of the user. However, very few have the means to exhaustively evaluate and compare many different LLMs.

The currently preferred evaluation benchmark for such ‘vibe-based’ evals is Chatbot Arena, which crowd-sources user ratings in blind A/B test conversations with various LLMs. The platform enables large-scale user testing and aggregates win rates across tens of thousands of conversations in a central leaderboard.
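To give a feel for how pairwise votes turn into a ranking, here is a minimal sketch that aggregates blind A/B outcomes into per-model win rates. The record format, the tie handling (half a win each), and the name `win_rates` are assumptions of mine; this is a simplification, not a description of how the platform computes its leaderboard.

```python
from collections import defaultdict


def win_rates(battles):
    """Aggregate (model_a, model_b, winner) records into a ranked list.

    winner is "a", "b", or "tie"; a tie counts as half a win for each side.
    Returns [(model, win_rate), ...] sorted from best to worst.
    """
    wins = defaultdict(float)
    games = defaultdict(int)
    for model_a, model_b, winner in battles:
        games[model_a] += 1
        games[model_b] += 1
        if winner == "a":
            wins[model_a] += 1.0
        elif winner == "b":
            wins[model_b] += 1.0
        else:
            wins[model_a] += 0.5
            wins[model_b] += 0.5
    ranked = ((model, wins[model] / games[model]) for model in games)
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical votes, made up for illustration only.
battles = [
    ("m1", "m2", "a"),
    ("m1", "m3", "a"),
    ("m2", "m3", "tie"),
    ("m3", "m1", "b"),
]
leaderboard = win_rates(battles)
```

Raw win rates ignore who each model was matched against, so a model that mostly faced weak opponents looks better than it should; rating schemes that account for opponent strength address this.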

Chatbot Arena is not perfect. Humans can get fooled and may prefer a response due to a variety of factors including its formatting, style, type of humor, etc., which can be exploited and optimized for. Chatbot Arena covers a narrow range of domains and conversations vary wildly in quality. Nevertheless, it provides an uncontaminated evaluation of chat user interactions (I’ve written previously about it); you can also check out Nathan Lambert’s thoughts on Chatbot Arena in his recent post.

The Future of Evaluation

The time when benchmarks lasted multiple decades has passed. Going forward, we will rely less on public benchmark results. Instead, the ability to evaluate a model directly for a specific downstream use case will be much more important.

This requires a) knowledge of how to efficiently and robustly evaluate LLMs; b) the infrastructure to enable this; and c) domain expertise to know how the problem can be modeled using an LLM. Institutions will be less likely to share such evals, given that releasing them means they will become contaminated, reducing their usefulness.

Benchmark creators should mitigate the risk of contamination in the design of their test data as much as possible. Internet data should be used sparingly—and not as the source of the solution. New benchmark data should ideally be created by humans from scratch.

As we already evaluate LLMs the way we assess humans (on standardized exams and general aptitude and proficiency tests), we should also follow a similar design process for future LLM evals: with regularly updated tests, assuming access to all prior exam data and accounting for a willingness to exploit loopholes.

Overall, we need to rethink the way we evaluate LLMs and shift to efficient evaluation processes that can keep pace with model advances.

[1] The AI Index Report 2021 mentions this and I’ve previously written about challenges in NLP benchmarking.

[2] The authors examined less powerful LLMs up to GPT3.5-Turbo (March 2023 release). It is likely that more powerful LLMs would exhibit stronger zero-shot and few-shot capabilities.